HOTSPOT -

You train a classification model by using a decision tree algorithm.

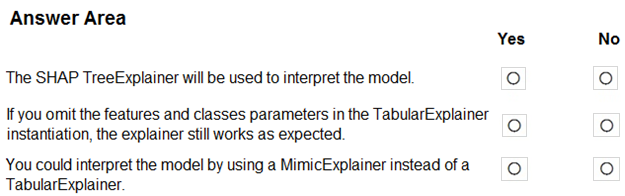

You create an explainer by running the following Python code. The variable feature_names is a list of all feature names, and class_names is a list of all class names.

```python
from interpret.ext.blackbox import TabularExplainer

# model is the trained decision tree classifier; x_train is its training data.
explainer = TabularExplainer(model, x_train, features=feature_names, classes=class_names)
```

You need to explain the predictions made by the model for all classes by determining the importance of all features.
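The requirement above calls for a global explanation: one importance value per feature, aggregated over the whole dataset and all classes, rather than an explanation of a single prediction. With the interpret package this would start from `explainer.explain_global(...)`. The minimal sketch below instead uses scikit-learn so it runs on its own. Assumptions: the Iris dataset stands in for the question's unspecified data, and permutation importance is a swapped-in technique, not the SHAP-based explainers that TabularExplainer selects internally.

```python
# Sketch: global feature importance for a decision tree classifier.
# Assumptions: scikit-learn is installed; Iris replaces the question's data;
# permutation importance is used instead of TabularExplainer's SHAP-based method.
from sklearn.datasets import load_iris
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
feature_names = list(iris.feature_names)
class_names = list(iris.target_names)

x_train, x_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0, stratify=iris.target
)

model = DecisionTreeClassifier(random_state=0).fit(x_train, y_train)

# Shuffle each feature in turn and measure the drop in accuracy on held-out data.
# The mean drop over the repeats is that feature's importance across all classes.
result = permutation_importance(model, x_test, y_test, n_repeats=10, random_state=0)

importance = dict(zip(feature_names, result.importances_mean))
for name, value in sorted(importance.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:.3f}")
```

Permutation importance is model-agnostic, so the same call would work if the decision tree were swapped for another classifier; the trade-off is that it scores features by their effect on accuracy rather than by per-prediction attributions.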

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:
