HOTSPOT -

You build an Azure Data Factory pipeline to move data from an Azure Data Lake Storage Gen2 container to a database in an Azure Synapse Analytics dedicated SQL pool.

Data in the container is stored in the following folder structure.

/in/{YYYY}/{MM}/{DD}/{HH}/{mm}

The earliest folder is /in/2021/01/01/00/00. The latest folder is /in/2021/01/15/01/45.

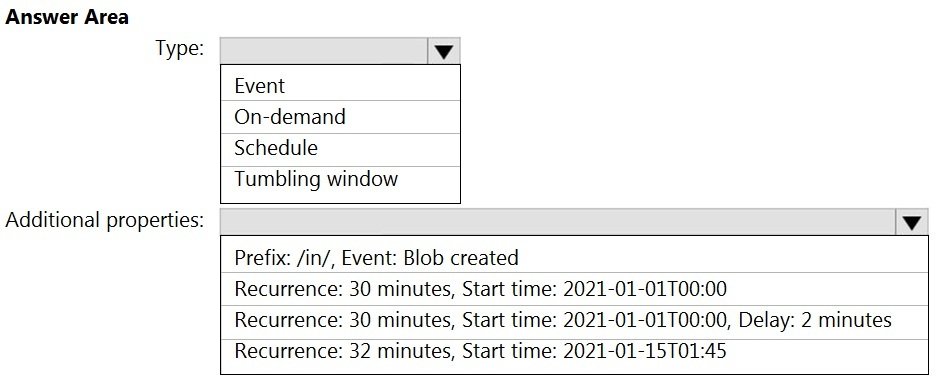

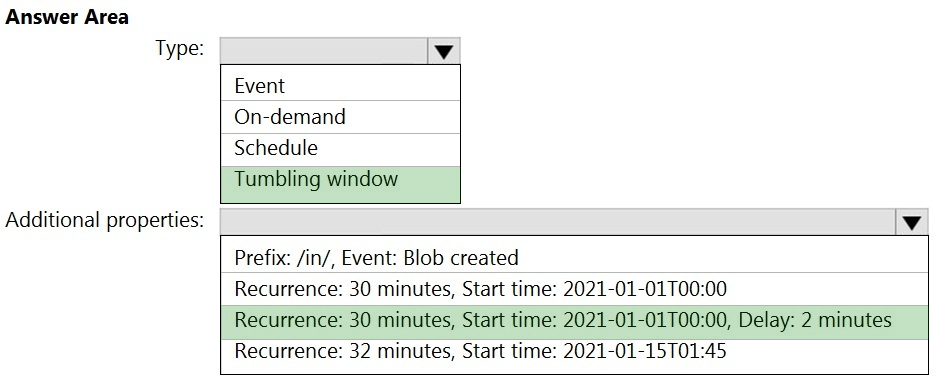

You need to configure a pipeline trigger to meet the following requirements:

✑ Existing data must be loaded.

✑ Data must be loaded every 30 minutes.

✑ Late-arriving data of up to two minutes must be included in the load for the time at which the data should have arrived.

How should you configure the pipeline trigger? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
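One way to read the three requirements is as a tumbling window trigger: it can backfill windows from a past start time, fire on a fixed 30-minute interval, and wait a configurable delay before processing each window. The sketch below is not taken from the question's answer area; it only illustrates that reading. The trigger name, pipeline name, parameter names, and `maxConcurrency` value are hypothetical placeholders, while the start time, interval, and delay come from the question text.

```python
import json
from datetime import datetime, timedelta, timezone

# Values taken from the question text.
EARLIEST_FOLDER = datetime(2021, 1, 1, 0, 0, tzinfo=timezone.utc)  # /in/2021/01/01/00/00
INTERVAL = timedelta(minutes=30)   # "loaded every 30 minutes"
DELAY = timedelta(minutes=2)       # "late-arriving data of up to two minutes"


def as_timespan(delta: timedelta) -> str:
    """Format a timedelta (under 24 hours) as an hh:mm:ss timespan string."""
    total = int(delta.total_seconds())
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def build_trigger(pipeline_name: str) -> dict:
    """Build a tumbling window trigger definition as a plain dict."""
    return {
        "name": "LoadEvery30Minutes",  # hypothetical trigger name
        "properties": {
            "type": "TumblingWindowTrigger",
            "typeProperties": {
                "frequency": "Minute",
                "interval": int(INTERVAL.total_seconds() // 60),
                # A start time in the past makes the trigger backfill old windows.
                "startTime": EARLIEST_FOLDER.strftime("%Y-%m-%dT%H:%M:%SZ"),
                # Each window is processed this long after it closes.
                "delay": as_timespan(DELAY),
                "maxConcurrency": 1,  # assumed value, not given in the question
            },
            "pipeline": {
                "pipelineReference": {
                    "type": "PipelineReference",
                    "referenceName": pipeline_name,
                },
                # Hypothetical pipeline parameters fed from the window bounds.
                "parameters": {
                    "windowStart": "@trigger().outputs.windowStartTime",
                    "windowEnd": "@trigger().outputs.windowEndTime",
                },
            },
        },
    }


def run_time_for_window(index: int) -> datetime:
    """Earliest time the window with the given zero-based index would run."""
    window_end = EARLIEST_FOLDER + (index + 1) * INTERVAL
    return window_end + DELAY


if __name__ == "__main__":
    print(json.dumps(build_trigger("CopyToSynapse"), indent=2))  # hypothetical pipeline
    print(run_time_for_window(0).isoformat())
```

Under these assumptions, the window covering 00:00 to 00:30 on 2021-01-01 is processed from 00:32, so a file that lands up to two minutes late is still picked up with the window it belongs to.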
