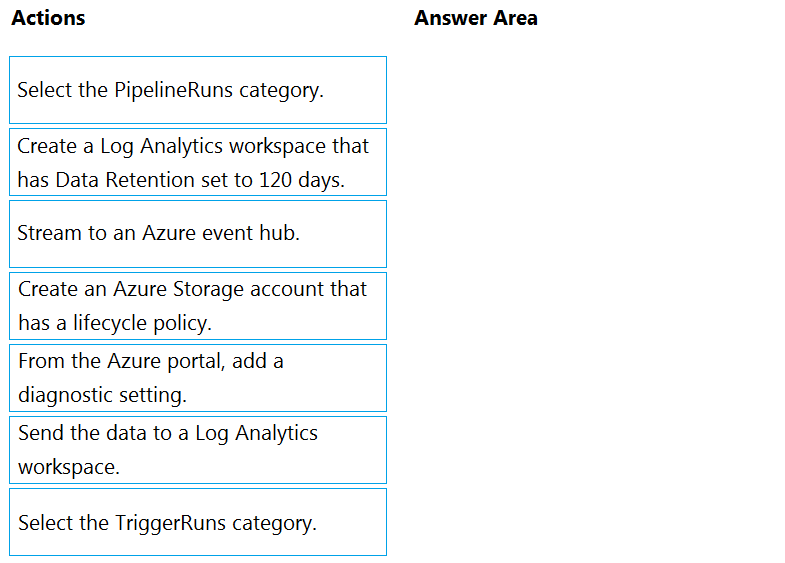

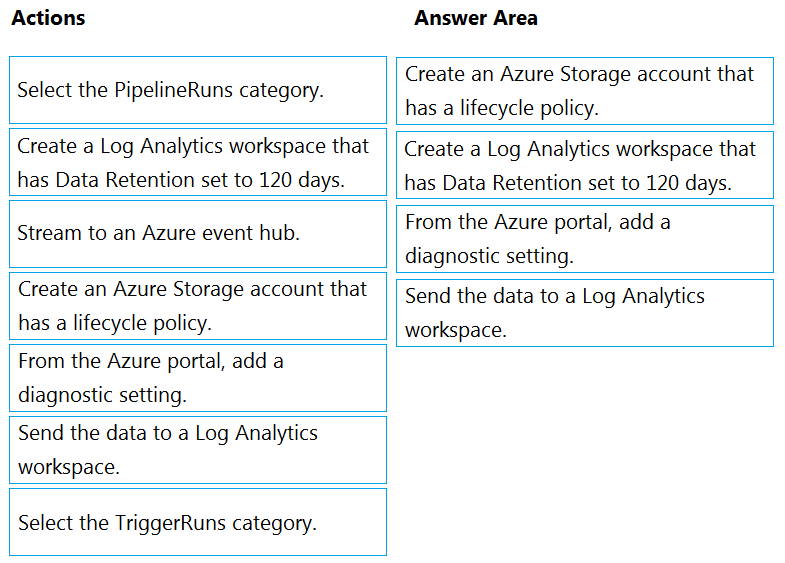

DRAG DROP

You have an Azure data factory.

You need to ensure that pipeline-run data is retained for 120 days. The solution must ensure that you can query the data by using Kusto Query Language (KQL).

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Select and Place:
