HOTSPOT -

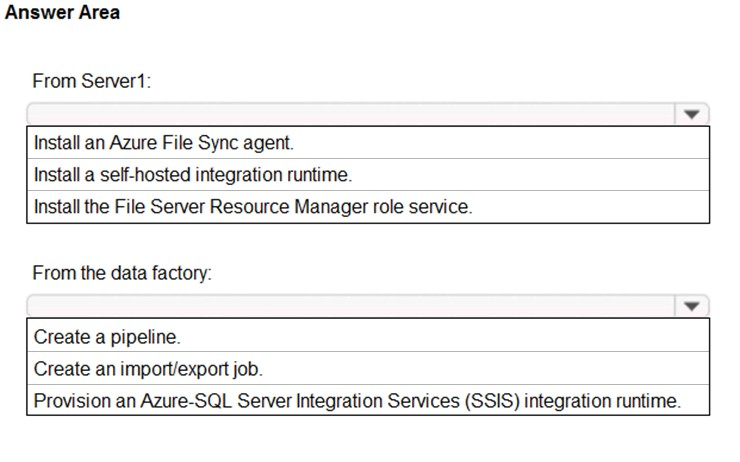

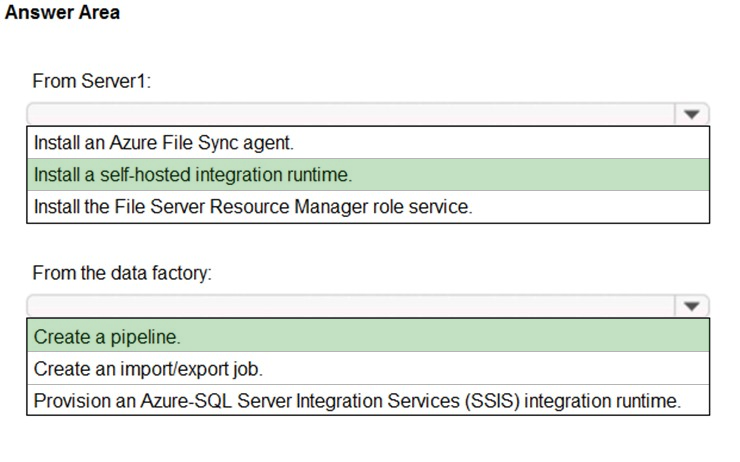

Your on-premises network contains a file server named Server1 that stores 500 GB of data.

You need to use Azure Data Factory to copy the data from Server1 to Azure Storage.

You add a new data factory.

What should you do next? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

