A Data Scientist is developing a machine learning model to classify whether a financial transaction is fraudulent. The labeled data available for training consists of 100,000 non-fraudulent observations and 1,000 fraudulent observations.
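The class counts above describe a heavily imbalanced dataset: roughly 1% of the observations are fraudulent. As a minimal sketch, the snippet below computes that ratio and the starting value for XGBoost's `scale_pos_weight` parameter that the XGBoost documentation suggests for imbalanced binary problems (sum of negative instances divided by sum of positive instances). The `params` dictionary is illustrative only and is not taken from the question.

```python
# Class counts stated in the question.
n_negative = 100_000  # non-fraudulent observations
n_positive = 1_000    # fraudulent observations

# Fraction of fraudulent observations in the training data.
positive_rate = n_positive / (n_negative + n_positive)
print(round(positive_rate, 4))  # 0.0099

# Typical starting value suggested by the XGBoost docs:
# sum(negative instances) / sum(positive instances).
scale_pos_weight = n_negative / n_positive
print(scale_pos_weight)  # 100.0

# Illustrative (hypothetical) XGBoost parameter dictionary using that value.
params = {
    "objective": "binary:logistic",
    "scale_pos_weight": scale_pos_weight,
}
```

Raising `scale_pos_weight` increases the weight of errors on the positive (fraud) class during training, which generally pushes the model toward fewer false negatives at the cost of more false positives.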

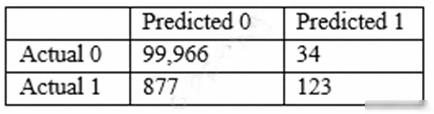

The Data Scientist applies the XGBoost algorithm to the data, resulting in the following confusion matrix when the trained model is applied to a previously unseen validation dataset. The accuracy of the model is 99.1%, but the Data Scientist needs to reduce the number of false negatives.
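A 99.1% accuracy says little on data this imbalanced. Assuming the validation set has the same class ratio as the training data (the question does not say), a model that always predicts "not fraud" already scores about 99.0%. The sketch below shows that baseline and how false negatives and recall (TP / (TP + FN)) respond to the decision threshold. The scores and labels are hypothetical, invented for illustration, and lowering the threshold is one general technique, not necessarily one of the answer choices.

```python
def confusion_counts(y_true, scores, threshold):
    """Return (tp, fp, tn, fn) when predicting fraud for score >= threshold."""
    tp = fp = tn = fn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


# Baseline accuracy of always predicting "not fraud", assuming the
# training class ratio (100,000 negatives, 1,000 positives).
baseline_accuracy = 100_000 / 101_000
print(f"{baseline_accuracy:.4f}")  # 0.9901

# Hypothetical model scores for six transactions (1 = fraud).
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.4, 0.2, 0.3, 0.1, 0.05]

for threshold in (0.5, 0.25):
    tp, fp, tn, fn = confusion_counts(y_true, scores, threshold)
    recall = tp / (tp + fn)
    print(threshold, "FN =", fn, "FP =", fp, "recall =", round(recall, 2))
# 0.5 FN = 2 FP = 0 recall = 0.33
# 0.25 FN = 1 FP = 1 recall = 0.67
```

Lowering the threshold from 0.5 to 0.25 removes one false negative but introduces one false positive, which is the usual trade-off when optimizing for recall on the minority class.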

Which combination of steps should the Data Scientist take to reduce the number of false negative predictions by the model? (Choose two.)
