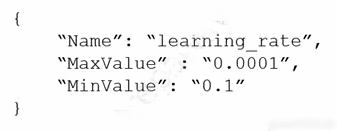

A machine learning (ML) specialist is using Amazon SageMaker hyperparameter optimization (HPO) to improve a model's accuracy. The learning rate parameter is specified in the following HPO configuration:

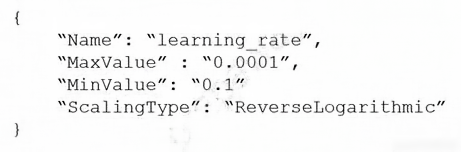

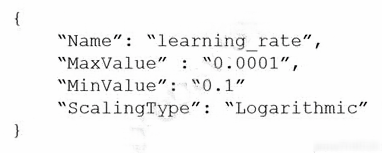

During the results analysis, the ML specialist determines that most of the training jobs had a learning rate between 0.01 and 0.1. The best result had a learning rate of less than 0.01. Training jobs need to run regularly over a changing dataset. The ML specialist needs to find a tuning mechanism that uses different learning rates more evenly from the provided range between MinValue and MaxValue.

Which solution provides the MOST accurate result?

Select the most accurate hyperparameter configuration form this HPO job.

Select the most accurate hyperparameter configuration form this HPO job.

Select the most accurate hyperparameter configuration form this training job.

Select the most accurate hyperparameter configuration form this training job.

Select the most accurate hyperparameter configuration form these three HPO jobs.

Select the most accurate hyperparameter configuration form these three HPO jobs.

ovokpus

Highly Voted 1 year, 10 months agoedvardo

Highly Voted 1 year, 11 months agoDJiang

1 year, 11 months ago587df71

4 months agokaike_reis

Most Recent 8 months, 2 weeks agocox1960

12 months agoMllb

1 year agoovokpus

1 year, 10 months agorhuanca

1 year, 11 months ago